Accelerating insight synthesis with Generative AI

AI POC | Innovation

Faced with a growing volume of unstructured user research, our team piloted a GenAI-driven synthesis assistant using GPT-based tools. The goal was to reduce time-to-insight, create more consistent summaries, and test the role of AI in augmenting—not replacing—research workflows. This became the organization's first GenAI adoption pilot for research automation.

I scoped and led the POC, including prompt engineering, researcher training, and validation testing. I also established review guardrails, created prompt templates, and facilitated internal adoption through demos and cross-functional sessions.

CLIENT

citizenM hotels

01. Situation

The company was generating more interviews, workshop notes, and field reports than we could synthesize manually—creating a backlog that delayed decision-making and reduced the consistency of insight outputs.

02. Task

Evaluate whether GPT-based tools could assist with synthesis by:

- Thematically clustering unstructured inputs
- Drafting preliminary summaries and user need statements
- Reducing researcher effort without compromising quality

03. Action

- Used the OpenAI API to test across 3 live research projects
- Developed prompt templates customized for synthesis, including role framing, tone, and output formatting
- Introduced “review flags” to help researchers identify AI-generated content requiring revision
- Created QA checklists to evaluate output quality and accuracy
- Delivered internal demos, gathered feedback, and iterated the system with researcher input

04. Result

- Reduced synthesis time by ~40% on mid-sized research projects
- Improved clarity and consistency of summary artifacts
- Sparked broader team interest in generative AI tools for research and product workflows
- Initiated an insight automation roadmap for future scaling

Illustrating the process

Artifacts and workflows from the GenAI synthesis pilot, showing how prompt design, human QA, and team integration came together

Manual synthesis in progress

The UX team clustering stickies and identifying patterns during a manual synthesis session

Manual synthesis session with the research team. The patterns surfaced here were later compared to GPT-assisted outputs to evaluate consistency, speed, and interpretive value.

Before & after synthesis timeline

Comparing time and effort across manual vs AI-assisted workflows

A side-by-side graphic showing the reduction in researcher hours and turnaround time when using GPT-assisted synthesis. Manual efforts required multiple iterations and cross-checks; the AI-assisted version delivered a usable first draft in under half the time, with human review added for final refinement.

Custom prompt template structure

Engineering clarity and consistency through reusable templates

A modular prompt framework that guided the AI’s role, tone, formatting, and clustering logic. Templates included example inputs and targeted outputs tailored for UX synthesis. Reusing the same templates made results more reproducible across projects.

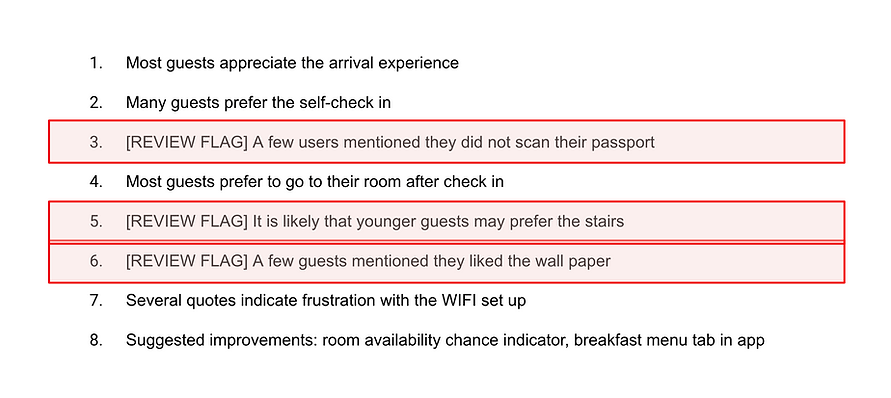
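As a rough illustration of what a modular template like this could look like in code, here is a minimal Python sketch. It is not the pilot's actual template: the class name `SynthesisPrompt`, its fields, and the example wording are all assumptions made for illustration. It assembles role, tone, format, and clustering instructions into a chat-style message list, with optional example input/output pairs placed before the real notes.

```python
from dataclasses import dataclass, field


@dataclass
class SynthesisPrompt:
    """Hypothetical modular prompt; field names are illustrative only."""

    role: str
    tone: str
    output_format: str
    clustering_rule: str
    # Optional (example input, target output) pairs shown to the model first.
    examples: list[tuple[str, str]] = field(default_factory=list)

    def build_messages(self, notes: list[str]) -> list[dict[str, str]]:
        """Combine the template modules and raw notes into chat messages."""
        system = "\n".join([
            f"Role: {self.role}",
            f"Tone: {self.tone}",
            f"Output format: {self.output_format}",
            f"Clustering: {self.clustering_rule}",
        ])
        messages = [{"role": "system", "content": system}]
        for example_input, example_output in self.examples:
            messages.append({"role": "user", "content": example_input})
            messages.append({"role": "assistant", "content": example_output})
        # Number the notes so themes can be traced back to their sources.
        numbered = "\n".join(f"{i}. {note}" for i, note in enumerate(notes, start=1))
        messages.append({"role": "user", "content": numbered})
        return messages


# Invented placeholder values, not taken from the pilot.
template = SynthesisPrompt(
    role="You are a UX research assistant supporting synthesis.",
    tone="Neutral and specific; avoid speculation.",
    output_format="Themes as bullets, each with a one-line user need statement.",
    clustering_rule="Group notes by underlying need, not by feature.",
    examples=[("1. Sample note", "- Theme: sample theme")],
)
messages = template.build_messages(["First raw note", "Second raw note"])
```

A message list in this shape could then be passed to a chat-style model API. Keeping each module as a separate field means one part (for example, the tone) can be adjusted per project without rewriting the rest.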

AI summary with review flags

Identifying where human review adds value

An example of an AI-generated summary with highlighted “review flag” markers—sections where tone, specificity, or logic required human input. This helped researchers focus review time where it mattered most.
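One way such flags could be handled programmatically is sketched below. It assumes a hypothetical inline marker of the form `[REVIEW: reason]`; the marker syntax, the function name `extract_review_flags`, and the sample text are all invented for illustration and do not describe the pilot's actual implementation.

```python
import re

# Assumed marker syntax: "[REVIEW: reason]" placed inline in the summary.
FLAG_PATTERN = re.compile(r"\[REVIEW:\s*([^\]]+)\]")


def extract_review_flags(summary: str) -> list[tuple[int, str]]:
    """Return (line number, reason) for every review marker in the summary."""
    flags = []
    for line_number, line in enumerate(summary.splitlines(), start=1):
        for match in FLAG_PATTERN.finditer(line):
            flags.append((line_number, match.group(1).strip()))
    return flags


# Invented sample text, not real research output.
sample_summary = (
    "Participants want a faster sign-up flow. [REVIEW: specificity]\n"
    "Most participants responded positively to the prototype.\n"
    "Onboarding feels confusing. [REVIEW: logic] [REVIEW: tone]"
)
flags = extract_review_flags(sample_summary)
for line_number, reason in flags:
    print(f"Line {line_number}: needs human review for {reason}")
```

Collecting the flags into a list like this would let a reviewer jump straight to the flagged lines instead of rereading the whole summary.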

Key takeaway

Navigator methods & frameworks used

- Four Shifts of AI UX
- Prompt design & evaluation protocol
- Human-in-the-loop QA checklist
- GenAI onboarding for research teams

This helped us get to a solid first draft in half the time. More importantly, it freed us up to focus on the higher-value things, like digging into the ‘why’ behind what users were saying.

– UX Researcher, citizenM hotels